ABSTRACT

Asian small-clawed otters (Aonyx cinerea) are a small, protected but threatened freshwater species. They are gregarious, form lifelong monogamous pairs, and communicate through scent and vocalizations. This study used a hidden Markov model (HMM) to classify stress versus non-stress calls from a sibling pair under professional care.

Keepers expertly annotated the vocalizations into seven contextual categories. Four of these (aggression, separation anxiety, pain, and prefeeding) were identified as stressful contexts, and three (feeding, training, and play) as non-stressful contexts. The vocalizations were segmented, manually sorted into broad call types, and analyzed to determine their signal-to-noise ratios (SNRs).
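The section does not describe how calls were segmented or how SNR was estimated. As one illustration, the sketch below uses a simple energy-threshold approach: it splits a recording into fixed-length frames, labels frames well above the quietest frame as "call" and the rest as "noise", and reports SNR as 10·log10(P_signal / P_noise) in dB. The frame length, the `threshold_ratio` rule, and the noise-subtraction step are hypothetical choices made for this example, not the study's method.

```python
import math

def frame_energies(samples, frame_len):
    """Mean-square energy of consecutive, non-overlapping frames (a trailing partial frame is dropped)."""
    if len(samples) < frame_len:
        raise ValueError("signal shorter than one frame")
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
    return energies

def segment_and_snr(samples, frame_len, threshold_ratio=4.0):
    """Label frames as call or noise and estimate the call SNR in dB.

    Hypothetical rule: a frame is 'call' when its energy exceeds
    threshold_ratio times the quietest frame's energy.
    Returns (call_frame_indices, snr_db).
    """
    energies = frame_energies(samples, frame_len)
    floor = max(min(energies), 1e-12)  # avoid a zero floor on digital silence
    call = [i for i, e in enumerate(energies) if e > threshold_ratio * floor]
    noise = [i for i, e in enumerate(energies) if e <= threshold_ratio * floor]
    if not call or not noise:
        raise ValueError("need both call and noise frames to estimate SNR")
    noise_power = sum(energies[i] for i in noise) / len(noise)
    call_power = sum(energies[i] for i in call) / len(call)
    if noise_power <= 0:
        raise ValueError("noise power is zero; SNR is undefined")
    # Call frames contain signal plus background noise, so subtract the noise estimate.
    signal_power = max(call_power - noise_power, 1e-12)
    return call, 10.0 * math.log10(signal_power / noise_power)

# Synthetic example: quiet background, a louder "call", then background again.
demo = [0.01, -0.01] * 8 + [0.1, -0.1] * 8 + [0.01, -0.01] * 4
call_frames, snr_db = segment_and_snr(demo, frame_len=4)
print(call_frames, round(snr_db, 2))
```

Under this rule a call whose estimated SNR falls in the 0 dB to 5 dB range would sit barely above the background, which is the kind of call the later SNR screening treats as noisy.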

Vocalizations from the most common contextual categories were then used in HMM-based automatic classification experiments covering individual identification, stress vs. non-stress classification, and classification of individual contexts. Results indicate that both individual identity and stress vs. non-stress were distinguishable, with accuracies above 90%, but that individual contexts within the stress category were not easily separable.
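A common way to use HMMs for this kind of classification is to fit one model per class and assign a call to the class whose model gives the highest likelihood. This section does not say whether the authors used exactly this setup, so the following is a sketch under that assumption. It scores a sequence of one-dimensional per-frame features with the log-space forward algorithm; the feature, the two-state left-to-right topology, and every parameter value are hypothetical placeholders, and training (e.g., Baum-Welch) is omitted.

```python
import math

def log_gauss(x, mean, var):
    """Log density of a 1-D Gaussian N(mean, var) evaluated at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def safe_log(p):
    return math.log(p) if p > 0 else -math.inf

def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))

class GaussianHMM:
    """HMM with one 1-D Gaussian emission per state; parameters are supplied, not trained."""

    def __init__(self, start, trans, means, variances):
        self.log_start = [safe_log(p) for p in start]
        self.log_trans = [[safe_log(p) for p in row] for row in trans]
        self.means = means
        self.variances = variances

    def log_likelihood(self, obs):
        """Forward algorithm in log space: returns log P(obs | model)."""
        n = len(self.means)
        alpha = [self.log_start[j] + log_gauss(obs[0], self.means[j], self.variances[j])
                 for j in range(n)]
        for x in obs[1:]:
            alpha = [
                logsumexp([alpha[i] + self.log_trans[i][j] for i in range(n)])
                + log_gauss(x, self.means[j], self.variances[j])
                for j in range(n)
            ]
        return logsumexp(alpha)

def classify(obs, models):
    """Return the label whose HMM assigns obs the highest log-likelihood."""
    return max(models, key=lambda label: models[label].log_likelihood(obs))

# Hypothetical two-state, left-to-right models; the means are made-up values of a
# made-up per-frame feature, chosen only to keep the example readable.
left_to_right = [[0.7, 0.3], [0.0, 1.0]]
models = {
    "stress": GaussianHMM([1.0, 0.0], left_to_right, means=[6.0, 8.0], variances=[1.0, 1.0]),
    "non-stress": GaussianHMM([1.0, 0.0], left_to_right, means=[2.0, 3.0], variances=[1.0, 1.0]),
}
print(classify([6.1, 6.4, 7.9, 8.2], models))
```

The same decision rule extends to individual identification or to individual contexts simply by adding one model per label to the `models` dictionary.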

METHODS

Vocalizations were recorded from a sibling pair, one male (Gyan) and one female (Malena), from July 2014 through March 2015. The pair was monitored and recorded in the backup area of an aquarium from a distance of 3 m, using a Zoom H1 recorder (Zoom Corp., Tokyo, Japan; sample rate 48,000 Hz, 32-bit resolution, 30 min recording duration) and a Shure SM86 microphone (50 Hz to 20 kHz frequency response, open-circuit sensitivity of -50 dBV/Pa). The data had a primary sampling rate of 44.1 kHz, and sound files had an average duration of 21.65 s (σ = 33.64 s).
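To give a sense of scale for the recorder settings above, the arithmetic below computes the raw data volume of one 30 min session at 48,000 Hz and 32 bits per sample. It assumes uncompressed linear PCM, ignores file headers, and reports the size per channel because the channel count is not stated; the helper name `pcm_size_bytes` is hypothetical.

```python
SAMPLE_RATE_HZ = 48_000
BYTES_PER_SAMPLE = 32 // 8   # 32-bit resolution
DURATION_S = 30 * 60         # 30 min recording

def pcm_size_bytes(sample_rate_hz, bytes_per_sample, duration_s, channels=1):
    """Raw size of uncompressed PCM audio, excluding any file header."""
    return sample_rate_hz * bytes_per_sample * duration_s * channels

samples_per_channel = SAMPLE_RATE_HZ * DURATION_S
size = pcm_size_bytes(SAMPLE_RATE_HZ, BYTES_PER_SAMPLE, DURATION_S)
print(f"{samples_per_channel:,} samples, {size / 1e6:.1f} MB per channel")
# prints "86,400,000 samples, 345.6 MB per channel"
```

A stereo recording at the same settings would double this figure.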

EXPERIMENTAL DESIGN AND RESULTS

An analysis of SNR as a function of vocalization type and behavioral context indicated that a study restricted to relatively “clean” vocalizations, defined as those with SNRs greater than 15 dB, would be limited to MS2 vs. MS4, i.e., separation anxiety versus prefeeding vocalizations from the female subject. The other three categories (GS2, GS4, and MNS3) contain mostly noisy calls in the 0 dB to 5 dB SNR range. In addition, most GS2, GS4, and MNS3 calls are chirps, whereas MS2 and MS4 calls are primarily squeals with a smaller subset of chirps.
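The “clean” criterion above amounts to a filter on per-call SNR. The sketch below applies the stated 15 dB threshold (strictly greater than) and counts which categories survive. The `Call` record layout and the example values are invented for illustration and only loosely mirror the qualitative description; they are not the study's data.

```python
from collections import Counter
from dataclasses import dataclass

CLEAN_SNR_DB = 15.0  # "clean" threshold stated in the text

@dataclass
class Call:
    category: str   # category label as used in the text, e.g. "MS2", "GS4", "MNS3"
    call_type: str  # broad call type, e.g. "squeal", "chirp"
    snr_db: float   # estimated signal-to-noise ratio in dB

def clean_calls(calls, threshold_db=CLEAN_SNR_DB):
    """Keep only calls whose SNR is strictly greater than the threshold."""
    return [c for c in calls if c.snr_db > threshold_db]

def categories_with_clean_calls(calls, threshold_db=CLEAN_SNR_DB):
    """Count surviving calls per category."""
    return Counter(c.category for c in clean_calls(calls, threshold_db))

# Hypothetical records, not measurements from the study.
calls = [
    Call("MS2", "squeal", 22.0),
    Call("MS4", "squeal", 18.5),
    Call("MS4", "chirp", 16.0),
    Call("GS2", "chirp", 3.0),
    Call("GS4", "chirp", 4.5),
    Call("MNS3", "chirp", 1.0),
]
print(categories_with_clean_calls(calls))
```

With these made-up values only the MS2 and MS4 records pass the threshold, which is the situation the text describes for the real recordings.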

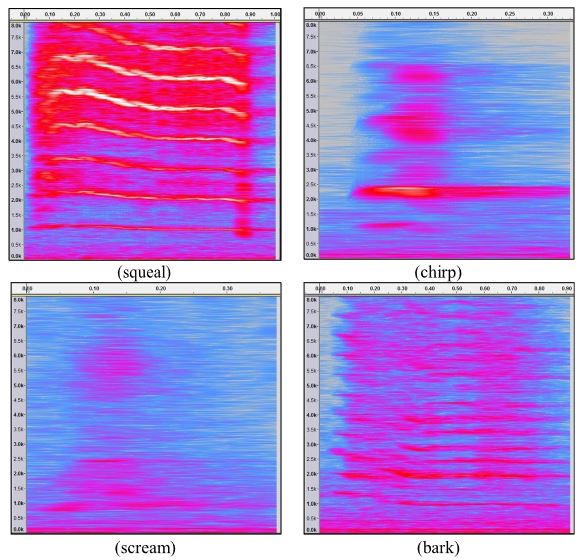

Fig. 1. Illustrative spectrograms for the four call types: (clockwise from top left) squeal, chirp, scream, and bark. The horizontal axis shows time in seconds and the vertical axis shows frequency in kHz.

CONCLUSIONS

This work has presented an initial study of the potential for automatic classification of otter vocalizations, with a focus on recognizing behavioral contexts. Knowledge of these vocal patterns, together with tools for differentiating them automatically, could give professional caregivers better ways to monitor the otters acoustically and to enhance caregiving, especially where a family group of more than two otters is kept.

Potential benefits of vocalization monitoring include identifying aggression within the group, improving husbandry behaviors to reduce stress, and better managing environmental noise. Results indicate that both individual identity and stress vs. non-stress are vocally distinguishable with accuracies above 90%, suggesting that vocal differentiation of stress is feasible and supporting further work in this direction.

Source: University of Cincinnati

Authors: Peter M. Scheifele | Michael T. Johnson | Michel Le Fry | Benjamin Hamel | Kathryn Laclede