ABSTRACT:

Assessing pain levels in animals is a crucial but time-consuming part of maintaining their welfare. Facial expressions in sheep are an efficient and reliable indicator of pain levels.

In this paper, we extend techniques for recognising human facial expressions to encompass facial action units in sheep, which can then facilitate automatic estimation of pain levels. Our multi-level approach consists of sheep face detection, facial landmark localisation, normalisation and facial feature extraction.

The facial features are described using Histogram of Oriented Gradients, and then classified using Support Vector Machines. Our experiments show an overall accuracy of 67% on sheep Action Unit classification. We argue that, with more data, our approach to automated pain level assessment can be generalised to other animals.

DATA

Unlike human AU analysis, facial expression recognition of sheep is still an underdeveloped area. Very few datasets are available on sheep and fewer include ground truth labels of facial expressions. In this section, we describe our main dataset and discuss the sheep facial AU taxonomy that is used in our experimental evaluation.

A. Dataset:

We use the dataset described by Yang et al. It consists of 480 images containing sheep faces. Face bounding boxes are provided, but there are no labels for sheep facial expressions, so we labelled the facial expressions ourselves.

B. AU Taxonomy and Labelling:

Facial Action Units (AUs) have been widely used in human facial expression analysis. Human AUs are indexed in the Facial Action Coding System (FACS), which forms the standard for automatic analysis of human facial expression and emotion recognition. In contrast, sheep facial expressions are yet to be categorised. We first discuss the sheep AU taxonomy, then present our approach to labelling the SFF and SFI datasets accordingly.

METHODOLOGY

In our work, we propose a full pipeline for automatic detection of pain level in sheep. We first present face detection and facial landmark localisation. We then extract appearance descriptors from the normalised facial features, followed by the AU classification.

The overall pain level is estimated based on the classification results of facial features. This pain assessment approach is not specific to sheep and can be generalised to other animals if the proper taxonomies are developed.

A. Face Detection:

We use the Viola-Jones object detection framework to implement frontal face detection. The SSF dataset is used to provide the ground truth. Because the number of ground truth images is small, we adopt a boosting procedure to obtain a larger number of training samples. Sheep faces are clipped from the ground truth images with the ears excluded, then rotations and intensity deviations are applied to each sheep face.
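The sample-expansion step described above (rotations and intensity deviations applied to each clipped face) can be illustrated with a minimal pure-Python sketch. The function names, the angle and intensity values, and the nearest-neighbour rotation are all assumptions made for illustration; the source does not specify them, and this is not the authors' implementation.

```python
import math

def rotate(image, degrees, fill=0):
    """Rotate a 2D grayscale image (list of rows) about its centre.

    Uses nearest-neighbour sampling; output pixels whose source falls
    outside the image are set to `fill`.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: locate the source pixel for each output pixel.
            dx, dy = x - cx, y - cy
            sx = cos_t * dx + sin_t * dy + cx
            sy = -sin_t * dx + cos_t * dy + cy
            ix, iy = int(round(sx)), int(round(sy))
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out

def shift_intensity(image, delta):
    """Add `delta` to every pixel, clipping to the 8-bit range [0, 255]."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]

def augment(face, angles=(-10, 0, 10), deltas=(-20, 0, 20)):
    """Return one augmented sample per (angle, intensity shift) pair.

    The default angles and deltas are hypothetical values.
    """
    return [shift_intensity(rotate(face, a), d) for a in angles for d in deltas]

# Usage: one clipped face yields len(angles) * len(deltas) training samples.
face = [[100] * 5 for _ in range(5)]
samples = augment(face)
```

With the hypothetical defaults, each ground truth face produces nine samples, including the unmodified original (angle 0, shift 0).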

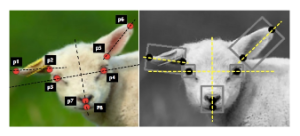

B. Facial Landmark Detection:

The Cascade Pose Regression (CPR) scheme is used for facial landmark localisation. Because sheep facial landmarks are sparsely distributed, the TIF approach is adopted in our work. Compared with Robust Cascade Pose Regression (RCPR), which accesses features on the line segment between two landmarks by linear interpolation, the TIF model is able to draw features from a larger area. The shape-indexed feature location is defined as:

$$p(S, i, j, k, \alpha, \beta) = y_i + \left(\alpha \cdot \vec{v}_{i,j} + \beta \cdot \vec{v}_{i,k}\right)$$
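As a rough illustration of this definition, the sketch below evaluates the feature location for a shape given as a list of 2D landmark coordinates. It assumes that y_i is the position of landmark i in shape S and that v_{i,j} is the vector from landmark i to landmark j (y_j minus y_i); the source does not spell out either definition, and the function name is hypothetical.

```python
def feature_location(shape, i, j, k, alpha, beta):
    """Evaluate p(S, i, j, k, alpha, beta) = y_i + alpha*v_ij + beta*v_ik.

    `shape` is a list of (x, y) landmark positions.
    Assumption: v_ij = y_j - y_i and v_ik = y_k - y_i.
    """
    yi, yj, yk = shape[i], shape[j], shape[k]
    v_ij = (yj[0] - yi[0], yj[1] - yi[1])
    v_ik = (yk[0] - yi[0], yk[1] - yi[1])
    return (yi[0] + alpha * v_ij[0] + beta * v_ik[0],
            yi[1] + alpha * v_ij[1] + beta * v_ik[1])

# Usage: three landmarks forming a right-angled triangle.
shape = [(0.0, 0.0), (4.0, 0.0), (0.0, 2.0)]
inside = feature_location(shape, 0, 1, 2, 0.5, 0.5)   # point off both edges
on_edge = feature_location(shape, 0, 1, 2, 0.5, 0.0)  # beta = 0
```

Under these assumptions, setting beta to 0 restricts the location to the line through landmarks i and j, which corresponds to the interpolation along a segment attributed to RCPR above; a non-zero beta lets the feature be sampled from the area spanned by the three landmarks.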

C. Feature-wise Normalisation:

Normalisation is commonly used in human face recognition to ensure that faces taken from various viewpoints are registered and comparable. In our work, feature-wise normalisation is applied to sheep faces: the ears, eyes and nose are extracted and normalised separately.

D. Feature Descriptor:

Histogram of Oriented Gradients (HOG) has been widely used as an appearance feature descriptor for human facial expressions. We use HOG to describe sheep facial features, relying on the Dlib implementation.
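To make the descriptor concrete, the following pure-Python sketch computes the core of a HOG-style descriptor for a single cell: a magnitude-weighted histogram of unsigned gradient orientations, followed by L2 normalisation. It is a simplified illustration only and does not reproduce the Dlib implementation; the bin count, the central-difference gradient and the function names are assumptions.

```python
import math

def cell_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations.

    `cell` is a 2D grayscale patch (list of rows). Orientations are folded
    into [0, 180) degrees; border pixels are skipped because central
    differences need both neighbours.
    """
    h, w = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The modulo guards against a rounding result of exactly 180.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

def l2_normalise(hist, eps=1e-6):
    """Scale the histogram to (approximately) unit L2 norm."""
    norm = math.sqrt(sum(v * v for v in hist) + eps)
    return [v / norm for v in hist]

# Usage: a vertical edge produces purely horizontal gradients (first bin).
vertical_edge = [[0, 0, 255, 255] for _ in range(4)]
hist = cell_histogram(vertical_edge)
descriptor = l2_normalise(hist)
```

A full HOG descriptor would tile the normalised feature region into many such cells and concatenate their block-normalised histograms; the single-cell version is kept here for brevity.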

E. Pain Level Estimation:

With HOG descriptors extracted and AUs labelled, we use Support Vector Machines (SVMs) to train a separate classifier for each facial feature. The overall pain level estimation approach can be described as follows: we first map the predicted AUs to feature-wise pain scores.
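The mapping step might look something like the sketch below. The AU class names, the score values and the averaging used to combine feature-wise scores are all hypothetical placeholders: the source states only that predicted AUs are mapped to feature-wise pain scores, and does not give the mapping or the aggregation rule.

```python
# Hypothetical mapping from a predicted AU class to a pain score.
AU_TO_SCORE = {"not_present": 0, "moderately_present": 1, "present": 2}

def feature_scores(predicted_aus):
    """Map each facial feature's predicted AU class to a pain score."""
    return {feature: AU_TO_SCORE[au] for feature, au in predicted_aus.items()}

def overall_pain_level(predicted_aus):
    """Combine feature-wise scores by averaging (an assumed aggregation)."""
    scores = feature_scores(predicted_aus)
    return sum(scores.values()) / len(scores)

# Usage: one predicted AU class per facial feature (ears, eyes, nose).
predicted = {"ears": "present", "eyes": "not_present", "nose": "moderately_present"}
per_feature = feature_scores(predicted)
overall = overall_pain_level(predicted)
```

In practice the inputs would be the outputs of the per-feature SVM classifiers, and the score table would follow whichever pain scale the taxonomy defines.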

EXPERIMENTAL EVALUATION

In this section, we evaluate the approach presented in the previous section. We compare 3-class and 2-class AU classification approaches. We also discuss the effect of data rebalancing as well as the generalisability of our AU classifiers.

CONCLUSIONS

In this paper, we present a multi-level approach to automatically estimate pain levels in sheep. We propose a preliminary sheep facial AU taxonomy based on the SPFES. We automate the assessment of facial expressions in sheep by adapting techniques for human emotion recognition.

We demonstrate that our approach can successfully detect facial AUs and assess pain levels of sheep. Our experiments also show that our AU classifiers are generalisable across different datasets.

FUTURE WORK

For future work, we would like to further explore classifier training with the concatenated feature descriptor to map facial features directly to pain levels. We would also like to add geometric features alongside the appearance features. This will help make our AU classifiers more robust to head pose deviation as well as breed variation.

A larger amount of labelled data is needed to further investigate data balancing and generalisation. Ultimately, we would like to test our automatic pain assessment approach on different animals. However, this will again require more effort in data collection and labelling.

Source: University of Cambridge

Authors: Yiting Lu | Marwa Mahmoud | Peter Robinson