ABSTRACT

An application called MIDIFlapper was developed that translates data from the Leap Motion, an NUI device, into MIDI data suitable for use by a contemporary digital audio workstation. This allows electronic musicians to use the Leap Motion for musical creation and live performance.

RELATED WORK

Figure 1: The Leap Motion in use, with onscreen graphic representation of data generated

I was very excited to hear Leap Motion promise exceedingly slim latency times in its promo materials, and was very impressed when I received my dev kit. The Leap’s response time is 50ms at its worst, and decreases from there depending on the Leap’s settings and the capabilities of the user’s video and USB hardware. 30ms is typical.

CONCEPT AND FEATURES

My intention was to design a tool for myself, one that would fit into my own preferred tool set and workflow as an electronic musician. Since my tool set is typical, I reasoned that if I created a tool that I liked using, many other musicians would find it useful as well.

REQUIREMENTS

Figure 3: The Korg NanoKontrol MIDI controller

Each of the knobs and sliders on the controller seen in Figure 3 produces MIDI values when operated by the musician. These MIDI values are interpreted by the DAW and used to set the values of user-mapped parameters to reflect the knob twist. DAWs typically allow the user to assign a physical control (knob or slider) to an internal parameter (filter cutoff, decay length, etc.) using a “MIDI learn mode” function.

ARCHITECTURE

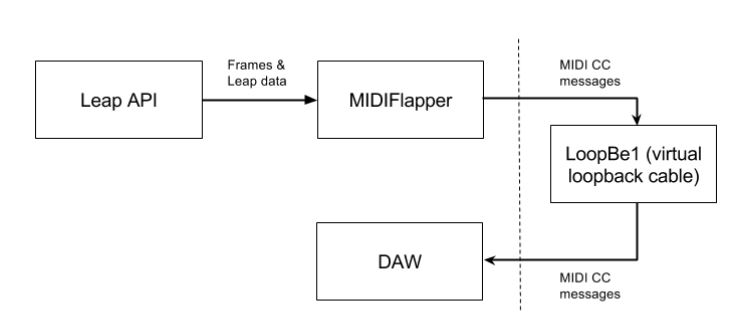

The ultimate goal is to create MIDI data for consumption by the DAW. First, the user interacts with the Leap Motion hardware. This generates Frame objects which MIDIFlapper subscribes to. Based on the user’s preferences, these data are then used to generate MIDI control-change messages which the DAW can interpret as a physical control.
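The last step of that pipeline, producing a control-change message, can be sketched with the standard javax.sound.midi classes. This is a minimal illustration rather than MIDIFlapper's actual code: the class name ControlChangeSketch and the method controlChange are hypothetical, and the channel and controller numbers are whatever the user has mapped in the DAW.

```java
import javax.sound.midi.InvalidMidiDataException;
import javax.sound.midi.ShortMessage;

// Hypothetical helper, not taken from MIDIFlapper's source.
final class ControlChangeSketch {

    /**
     * Builds a MIDI control-change message.
     * channel is 0-15; controller and value are 0-127.
     */
    static ShortMessage controlChange(int channel, int controller, int value) {
        try {
            ShortMessage message = new ShortMessage();
            message.setMessage(ShortMessage.CONTROL_CHANGE, channel, controller, value);
            return message;
        } catch (InvalidMidiDataException e) {
            // Out-of-range arguments are reported as an unchecked exception.
            throw new IllegalArgumentException("Invalid control-change data", e);
        }
    }
}
```

A message built this way would typically be handed to a javax.sound.midi.Receiver with send(message, -1), where -1 means that no timestamp is supplied.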

TOOLS

Figure 4: MIDIFlapper’s place in the generation of MIDI data for consumption by the DAW

The Leap API comes in C++, C#, Objective-C, Java, JavaScript, and Python. I chose to implement my app in Java because I am familiar with it, it has cross-platform support, and its standard libraries include MIDI operations. For this project I used my version control solution of choice, git-plus-GitHub, and my Java IDE of choice, IntelliJ IDEA. The app’s GUI is in Java’s Swing. Tests were written in JUnit 4.

DESIGN DECISIONS

Transforms are the heart of the application. A Transform is capable of accepting a Frame object (which originates from the Leap API) and returning a 0-127 value based on the Frame data. For example, a Transform based on the Finger X Axis takes a Finger from the Frame data, examines its position on the x-axis, and normalizes that position between a minimum and a maximum x-axis value to obtain a 0-127 value.
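The normalization step can be sketched as follows. To keep the example self-contained it takes the x-axis position as a plain double rather than a Leap Frame; the class name AxisNormalizer, the method toMidiValue, and the choice to clamp out-of-range positions are assumptions made for illustration, not details from the original implementation.

```java
// Hypothetical sketch of the normalization a Finger X Axis Transform performs.
final class AxisNormalizer {

    /**
     * Maps a position to the 0-127 MIDI range.
     * Positions outside [min, max] are clamped to the nearest bound (an assumption).
     */
    static int toMidiValue(double position, double min, double max) {
        if (max <= min) {
            throw new IllegalArgumentException("max must be greater than min");
        }
        double clamped = Math.max(min, Math.min(max, position));
        double fraction = (clamped - min) / (max - min); // 0.0 at min, 1.0 at max
        return (int) Math.round(fraction * 127);
    }
}
```

With an x-axis range of -100 to 100, a finger at the left bound yields 0 and a finger at the right bound yields 127.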

DEVELOPMENT STORIES AND USEFUL FINDINGS

One lesson I learned and put to immediate concrete use was when to use an interface and when to use an abstract class. Obviously an abstract class can define data and behavior whereas an interface cannot, but what does this mean on a pragmatic level? A useful heuristic is that an abstract class represents an is-a relationship, whereas an interface represents a can-do-this relationship.
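The is-a versus can-do-this heuristic can be illustrated with a small sketch. All type names here (ValueProducer, RangedTransform, DirectTransform, InvertedTransform) are hypothetical and chosen for the example; they are not MIDIFlapper's real class hierarchy.

```java
/** Can-do-this: anything able to turn a reading into a 0-127 value. */
interface ValueProducer {
    int produce(double reading);
}

/** Is-a: every subclass is a ranged transform and inherits its data and scaling. */
abstract class RangedTransform implements ValueProducer {
    protected final double min;
    protected final double max;

    RangedTransform(double min, double max) {
        if (max <= min) {
            throw new IllegalArgumentException("max must be greater than min");
        }
        this.min = min;
        this.max = max;
    }

    /** Subclasses decide how a raw reading is adjusted before scaling. */
    protected abstract double adjust(double reading);

    @Override
    public final int produce(double reading) {
        double clamped = Math.max(min, Math.min(max, adjust(reading)));
        return (int) Math.round((clamped - min) / (max - min) * 127);
    }
}

/** Uses the reading as-is. */
final class DirectTransform extends RangedTransform {
    DirectTransform(double min, double max) { super(min, max); }

    @Override
    protected double adjust(double reading) { return reading; }
}

/** Mirrors the reading within the range, so max maps to 0 and min to 127. */
final class InvertedTransform extends RangedTransform {
    InvertedTransform(double min, double max) { super(min, max); }

    @Override
    protected double adjust(double reading) { return min + max - reading; }
}
```

The abstract class carries the shared fields and scaling logic, while code that only needs a value can depend on the interface alone.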

FUTURE DEVELOPMENT

Late in development, once I had added a large number of Controls to the control panel, my application began to frequently trigger LoopBe1’s flood/short-circuit detection. LoopBe1, the virtual MIDI loopback cable my project relies on, mutes the cable if a certain bandwidth of MIDI data is exceeded. In order to unmute, the user has to click LoopBe1’s taskbar icon and use a dialog box. In a working setting, this is an unacceptable annoyance; in a performance setting, this would bring the performance to a disastrous halt. The short-circuit detection was triggered almost immediately every time the user’s hand entered the Leap’s detection radius.
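One common way to reduce MIDI bandwidth, shown here purely as an illustration and not as the fix adopted in this project, is to send a control-change message only when a controller's value has actually changed since the last message. The class ChangeFilter and its method shouldSend are hypothetical names, and whether this alone would keep traffic below LoopBe1's threshold is untested.

```java
import java.util.Arrays;

// Hypothetical mitigation sketch; not part of MIDIFlapper as described.
final class ChangeFilter {
    // Last value sent per controller number (0-127); -1 means nothing sent yet.
    private final int[] lastSent = new int[128];

    ChangeFilter() {
        Arrays.fill(lastSent, -1);
    }

    /** Returns true only if this value differs from the last one sent for the controller. */
    boolean shouldSend(int controller, int value) {
        if (lastSent[controller] == value) {
            return false; // duplicate value: skip it to save bandwidth
        }
        lastSent[controller] = value;
        return true;
    }
}
```

Because each Transform output is quantized to 0-127, consecutive frames often map to the same value, so a filter of this kind would drop those repeats before they reach the virtual cable.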

CONCLUSIONS

The Microsoft Kinect promised a lot and didn’t deliver. In the wake of that disappointment, I was guarded about the claimed abilities of the Leap Motion. However, I was surprised at the accuracy of the detection, the low latency, and the cleanliness of the API. It’s ideal for this kind of project. It is billed, though, as a replacement for more typical interaction interfaces, and I think that is inappropriate. It cannot beat the mouse, keyboard, or touchscreen at their own games: nothing beats a mouse’s pointing ability, and nothing types like a keyboard. Still, nothing does 3D hand-tracking like the Leap does, and that is why it succeeds for my application.

Source: California Polytechnic State University

Authors: Mark Henry | Franz J. Kurfess
