ABSTRACT

In this work, we present an unobtrusive and non-invasive perception framework based on the synergy between two main acquisition systems: the Touch-Me Pad (TMP), consisting of two electronic patches for physiological signal extraction and processing, and the Scene Analyzer (SA), a visual-auditory perception system specifically designed to detect social and emotional cues.

We explain why the information extracted by this kind of framework is particularly suitable for social robotics applications, and how the system was designed for use in human-robot interaction scenarios.

MATERIAL & METHODS

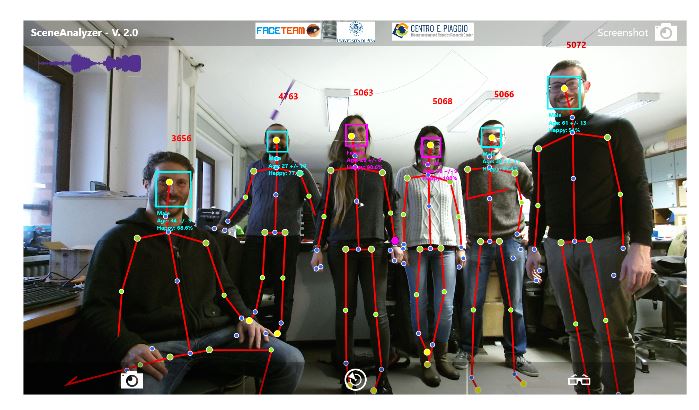

Figure 1. Screenshot of the SA (Scene Analyzer) visualiser in a crowded scenario

The software also provides facial expression estimation, age and gender assessment (even for more than 6 persons), speaker identification, proximity and noise level estimation, among other features (Figure 1). Thanks to its modular structure, SA can easily be reconfigured and adapted to different robotic frameworks by adding or removing perceptual modules.
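The modular idea can be illustrated with a minimal sketch, assuming each perceptual module is simply a function that maps one input frame to a dictionary of features. The class `SceneAnalyzerSketch`, the modules `noise_level` and `people_count`, and the frame keys `audio` and `faces` are all hypothetical names chosen for illustration; this is not the actual SA implementation or API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical module type: a function from a raw frame to a feature dict.
Module = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class SceneAnalyzerSketch:
    """Illustrative plug-in pipeline (assumption, not the real SA code)."""
    modules: Dict[str, Module] = field(default_factory=dict)

    def add_module(self, name: str, module: Module) -> None:
        self.modules[name] = module

    def remove_module(self, name: str) -> None:
        # Removing an unknown module is a no-op, so reconfiguration is safe.
        self.modules.pop(name, None)

    def process(self, frame: Dict[str, Any]) -> Dict[str, Any]:
        # Run every registered module on the same frame and collect each
        # output under the module's name, giving one record per frame.
        return {name: module(frame) for name, module in self.modules.items()}


def noise_level(frame: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical module: root-mean-square amplitude of audio samples."""
    samples = frame.get("audio", [])
    if not samples:
        return {"rms": 0.0}
    return {"rms": (sum(s * s for s in samples) / len(samples)) ** 0.5}


def people_count(frame: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical module: number of detected faces in the frame."""
    return {"count": len(frame.get("faces", []))}


sa = SceneAnalyzerSketch()
sa.add_module("noise", noise_level)
sa.add_module("people", people_count)
frame = {"audio": [3.0, -4.0], "faces": ["face_1", "face_2"]}
result = sa.process(frame)
```

Adapting the pipeline to a different robot then amounts to calling `add_module` or `remove_module`, without touching the other modules.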

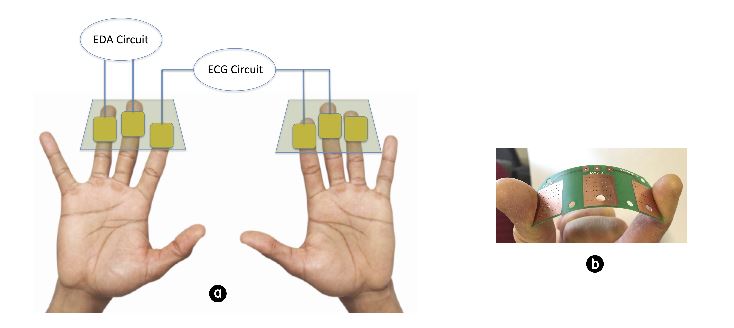

Figure 3. Schema of the acquisition patches

The inter-beat interval (IBI) signal, i.e. the time in milliseconds between two consecutive R waves of the electrocardiogram (ECG), is an important parameter for studying ECG correlates such as heart rate (HR) and heart rate variability (HRV). It is usually acquired with disposable Ag/AgCl electrodes placed on the thorax. The aim was to develop an architecture in which the user's fingers could be used for the simultaneous acquisition of electrodermal activity (EDA) and IBI parameters (Figure 3).
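The relation between R-peak times, IBI, HR and HRV can be made concrete with a short sketch. It assumes the R-peak timestamps (in seconds) have already been detected, and it uses SDNN and RMSSD, two common time-domain HRV indices, purely as illustrative choices; the text above does not state which HRV measures the TMP pipeline computes.

```python
from statistics import mean, stdev
from typing import List


def ibi_ms(r_peak_times_s: List[float]) -> List[float]:
    """Inter-beat intervals in milliseconds from R-peak timestamps in seconds."""
    return [(b - a) * 1000.0 for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]


def heart_rate_bpm(ibis: List[float]) -> float:
    """Mean heart rate: 60000 ms per minute divided by the mean IBI (ms)."""
    return 60000.0 / mean(ibis)


def sdnn_ms(ibis: List[float]) -> float:
    """SDNN: sample standard deviation of the IBIs (needs at least 2 IBIs)."""
    return stdev(ibis)


def rmssd_ms(ibis: List[float]) -> float:
    """RMSSD: root mean square of successive IBI differences (needs >= 2 IBIs)."""
    diffs = [b - a for a, b in zip(ibis, ibis[1:])]
    return mean(d * d for d in diffs) ** 0.5


# Example: four R peaks give three intervals of roughly 800, 900 and 800 ms.
r_peaks = [0.0, 0.8, 1.7, 2.5]
ibis = ibi_ms(r_peaks)
hr = heart_rate_bpm(ibis)
```

For this example the mean IBI is about 833 ms, which corresponds to about 72 beats per minute.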

EXPERIMENTS

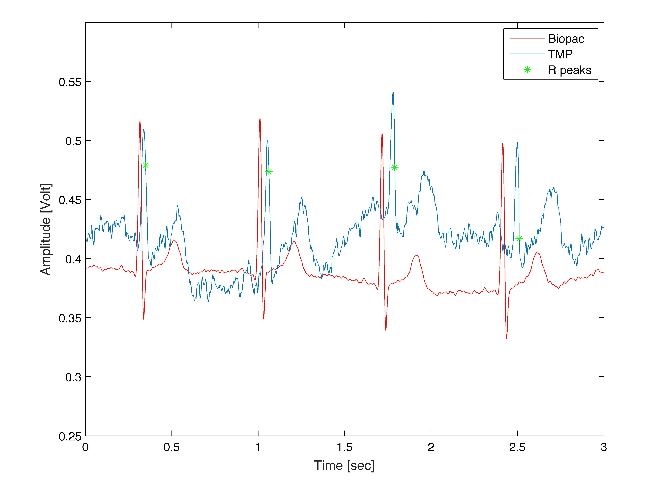

Figure 8. Synchronised ECG signals acquired from the thorax with a Biopac instrument (red line) and from the fingers with the TMP system (blue line)

Seven users were asked to attach Biopac Ag/AgCl electrodes to the thorax and then to place their fingers on the TMP sensor, so that ECG signals could be acquired from both systems simultaneously while the subject remained still. Figure 8 shows the collected signals after time alignment and processing with a dedicated software tool (WFDB) available from PhysioNet.
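The text does not say how the two recordings were time-aligned. One common approach, shown here only as an assumed illustration and not as the authors' procedure or a WFDB feature, is to pick the sample lag that maximizes the cross-correlation between the mean-removed signals, then trim both signals to their overlapping part. The functions `best_lag` and `align` are hypothetical names, and both signals are assumed to share the same sampling rate.

```python
from typing import List, Sequence, Tuple


def best_lag(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) maximizing the cross-correlation of mean-removed signals.

    A positive result means `other` is delayed with respect to `ref`.
    """
    mr = sum(ref) / len(ref)
    mo = sum(other) / len(other)
    r = [x - mr for x in ref]
    o = [x - mo for x in other]
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate ref[i] with other[i + lag] over the overlapping samples.
        score = sum(r[i] * o[i + lag] for i in range(len(r)) if 0 <= i + lag < len(o))
        if score > best_score:
            best, best_score = lag, score
    return best


def align(ref: Sequence[float], other: Sequence[float], lag: int) -> Tuple[List[float], List[float]]:
    """Shift by `lag` and trim both signals to a common length."""
    if lag >= 0:
        other = other[lag:]  # drop the leading samples of the delayed signal
    else:
        ref = ref[-lag:]
    n = min(len(ref), len(other))
    return list(ref[:n]), list(other[:n])


# Toy example: the same "R wave" appears two samples later in `finger`.
thorax = [0, 0, 1, 5, 1, 0, 0, 0, 0, 0]
finger = [0, 0, 0, 0, 1, 5, 1, 0, 0, 0]
lag = best_lag(thorax, finger, max_lag=4)
thorax_al, finger_al = align(thorax, finger, lag)
```

On real recordings, a brute-force search like this is only practical for short windows. For longer signals, an FFT-based correlation would be the usual choice.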

DISCUSSION

Maintaining a unique ID for each subject, as demonstrated in the first experiment, allows the presented perceptual framework to be used both to compare different subjects present in the same scene and to compare data from different sessions with the same subject.

This property, coupled with the ability to extract social information from natural interaction scenarios, makes the system particularly suitable for social robotics, especially in applications that require long-term studies, such as educational contexts, where personalized learning is essential.

FUTURE WORK

The presented perception system still needs to be tested under several variations, e.g., more frequent occlusions, more subjects, and multiple repetitions of the presented experiments. Although the system has already been validated, these further experiments will yield more robust results. The perceptual framework is already used within the EASEL European Project, by which it has been partially funded, and several experiments are in progress.

CONCLUSIONS

In this work, an unobtrusive perception framework for social robots has been presented and tested. The system was designed to exploit the synergy between two main acquisition systems: the audiovisual acquisition system called Scene Analyzer, and the electronic patches for physiological signal extraction called Touch-Me Pad.

To maximize the naturalness of the social interaction, and given the importance of embodiment, all the sensory devices were physically integrated into the FACE humanoid robot, a hyper-realistic android with which we performed two experiments. These experiments tested whether subject tracking was maintained during human-robot interaction regardless of which part of the perceptual interface was in charge of the acquisition, and whether all the gathered information was effectively integrated into a single dataset called the meta-scene.

A meta-scene can be regarded as the output of our perception framework and the input to potential cognitive systems. Through the presented framework, these systems can analyze a complete collection of data about the social environment in which the robot is involved. This will be an important improvement for social robotics, making the understanding of human behavior more reliable even in crowded and noisy environments and in applications where long-term interactions have to be studied.
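The integration step can be pictured with a minimal sketch. The actual meta-scene format is not described here, so the `MetaScene` class, the `build_meta_scene` helper and every field name (`id`, `expression`, `ibi_ms`, `eda_uS`, ...) are hypothetical. The sketch also assumes that each TMP reading has already been attributed to a tracked subject ID. The key property it illustrates is that audiovisual and physiological observations are stored under the same persistent subject ID.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable


@dataclass
class MetaScene:
    """Hypothetical meta-scene: one entry per persistent subject ID."""
    timestamp: float
    subjects: Dict[int, Dict[str, Any]] = field(default_factory=dict)

    def merge(self, subject_id: int, source: str, data: Dict[str, Any]) -> None:
        # Data from different acquisition systems end up under the same
        # subject ID, so the subject stays identifiable whichever device
        # produced the observation.
        self.subjects.setdefault(subject_id, {})[source] = data


def build_meta_scene(timestamp: float,
                     sa_records: Iterable[Dict[str, Any]],
                     tmp_records: Iterable[Dict[str, Any]]) -> MetaScene:
    """Merge SA and TMP records (each carrying an 'id' key) into one scene."""
    scene = MetaScene(timestamp)
    for source, records in (("SA", sa_records), ("TMP", tmp_records)):
        for rec in records:
            payload = {k: v for k, v in rec.items() if k != "id"}
            scene.merge(rec["id"], source, payload)
    return scene


# Toy example: subject 1 touches the pad, subject 2 is only seen and heard.
sa_records = [
    {"id": 1, "expression": "happy", "age": 30},
    {"id": 2, "expression": "neutral", "age": 45},
]
tmp_records = [{"id": 1, "ibi_ms": 820.0, "eda_uS": 2.1}]
scene = build_meta_scene(12.5, sa_records, tmp_records)
```

A downstream cognitive system could then read `scene.subjects[1]` and get both the visual cues and the physiological data for the same person.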

Source: University of Pisa

Authors: Lorenzo Cominelli | Nicola Carbonaro | Daniele Mazzei | Roberto Garofalo | Alessandro Tognetti | Danilo De Rossi